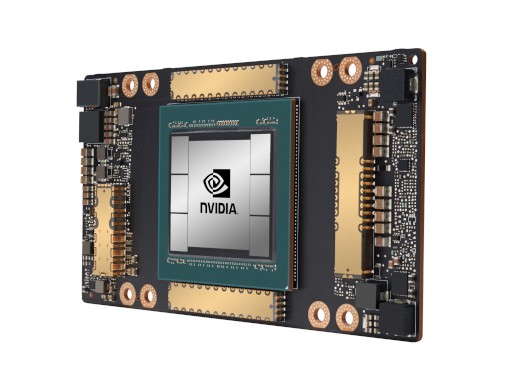

A100 is part of the complete NVIDIA data center solution that incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from NGC™. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale.

NVIDIA A100 TENSOR CORE GPU

- GPU Memory: 40 GB

- Peak FP16 Tensor Core: 312 TF

- System Interface: 4/8 SXM on NVIDIA HGX A100

About

The Most Powerful End-to-End AI and HPC Data Center Platform

Specifications

| Specification | Value |
|---|---|
| Peak FP64 | 9.7 TF |
| Peak FP64 Tensor Core | 19.5 TF |
| Peak FP32 | 19.5 TF |
| Peak TF32 Tensor Core | 156 TF \| 312 TF* |
| Peak BFLOAT16 Tensor Core | 312 TF \| 624 TF* |
| Peak FP16 Tensor Core | 312 TF \| 624 TF* |
| Peak INT8 Tensor Core | 624 TOPS \| 1,248 TOPS* |
| Peak INT4 Tensor Core | 1,248 TOPS \| 2,496 TOPS* |
| GPU Memory | 40 GB |
| GPU Memory Bandwidth | 1,555 GB/s |
| Interconnect | NVIDIA NVLink 600 GB/s; PCIe Gen4 64 GB/s |
| Multi-Instance GPU | Various instance sizes with up to 7 MIGs @ 5 GB |
| Form Factor | 4/8 SXM on NVIDIA HGX™ A100 |
| Max TDP Power | 400 W |

\* With sparsity.
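The peak-throughput and memory-bandwidth figures in this table can be combined into a simple roofline estimate. The sketch below is an illustration under stated assumptions, not an NVIDIA tool: it uses only the dense (non-sparse) peaks and the 1,555 GB/s bandwidth listed for the A100 40GB, and the helper names `ridge_point` and `attainable_tflops` are hypothetical. The ridge point is the arithmetic intensity (FLOPs per byte read from GPU memory) above which a kernel can, in principle, become compute-bound rather than memory-bound.

```python
# Minimal roofline sketch for the A100 40GB, using the dense (non-sparse) peak
# figures from the specification table. Helper names are hypothetical.

BANDWIDTH_GBPS = 1555.0  # GPU memory bandwidth, GB/s

PEAK_TFLOPS = {
    "FP64": 9.7,
    "FP64 Tensor Core": 19.5,
    "FP32": 19.5,
    "TF32 Tensor Core": 156.0,  # dense figure; 312 TF with sparsity
    "FP16 Tensor Core": 312.0,  # dense figure; 624 TF with sparsity
}


def ridge_point(peak_tflops: float, bandwidth_gbps: float = BANDWIDTH_GBPS) -> float:
    """FLOPs per byte at which peak compute and peak bandwidth balance."""
    # Convert TFLOP/s to FLOP/s (1e12) and GB/s to byte/s (1e9).
    return (peak_tflops * 1e12) / (bandwidth_gbps * 1e9)


def attainable_tflops(
    intensity: float, peak_tflops: float, bandwidth_gbps: float = BANDWIDTH_GBPS
) -> float:
    """Roofline bound: min(peak compute, bandwidth x arithmetic intensity)."""
    # GB/s * FLOP/byte = GFLOP/s; divide by 1e3 to get TFLOP/s.
    memory_bound = bandwidth_gbps * intensity / 1e3
    return min(peak_tflops, memory_bound)


if __name__ == "__main__":
    for name, peak in PEAK_TFLOPS.items():
        print(f"{name:>17}: ridge point {ridge_point(peak):6.1f} FLOP/byte")
```

For example, FP32 work needs roughly 12.5 FLOP/byte to reach its 19.5 TF peak, while FP16 Tensor Core work needs about 200 FLOP/byte, which is why large matrix multiplies gain far more from Tensor Cores than bandwidth-bound elementwise kernels do. Real kernels rarely reach either peak, so these numbers are upper bounds only.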

Related products

NVIDIA H200 NVL

SKU: 900-21010-0040-000

The GPU for Generative AI and HPC: The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for ...

NVIDIA TESLA V100-32GB

SKU: 900-2G500-0010-000

- GPU Memory: 32 GB HBM2

- CUDA Cores: 5120

- NVIDIA Tensor Cores: 640

- Single-Precision Performance: 14 TeraFLOPS

NVIDIA QUADRO RTX8000 PASSIVE

SKU: 900-2G150-0050-000

- GPU Memory: 48 GB GDDR6 with ECC

- CUDA Cores: 4608

- NVIDIA Tensor Cores: 576

- NVIDIA RT Cores: 72
