NVIDIA TESLA V100-32GB

SKU: 900-2G500-0010-000

Categories: GPU and Devices, Professional GPU

Tags: gpu, nvidia, tesla, v100

Brand: NVIDIA

- GPU Memory: 32GB HBM2

- CUDA Cores: 5120

- NVIDIA Tensor Cores: 640

- Single-Precision Performance: 14 TeraFLOPS

About

Every industry wants intelligence. Its ever-growing lakes of data hold insights that could revolutionize entire fields: personalized cancer therapy, prediction of the next big hurricane, and virtual personal assistants that converse naturally. These opportunities can become a reality when data scientists are given the tools they need to realize their life’s work.

NVIDIA® Tesla® V100 is the world’s most advanced data center GPU ever built to accelerate AI, HPC, and graphics. Powered by NVIDIA Volta™, the latest GPU architecture, NVIDIA® Tesla® V100 offers the performance of 100 CPUs in a single GPU—enabling data scientists, researchers, and engineers to tackle challenges that were once impossible.

Specifications

- GPU Architecture: NVIDIA Volta

- NVIDIA CUDA® Cores: 5120

- Double-Precision Performance: 7.5 TeraFLOPS

- Single-Precision Performance: 15 TeraFLOPS

- Deep Learning: 120 TeraFLOPS

- Interconnect Bandwidth: NVLink 300 GB/s

- GPU Memory: 32 GB CoWoS HBM2

- Memory Bandwidth: 900 GB/s

- Max Power Consumption: 250 W

Related products

NVIDIA TESLA P100

SKU: N/A

- GPU Memory: 16 GB CoWoS HBM2

- CUDA Cores: 3584

- Single-Precision Performance: 9.3 TeraFLOPS

- System Interface: x16 PCIe Gen3

NVIDIA H200 NVL

SKU: 900-21010-0040-000

The GPU for Generative AI and HPC

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for ...

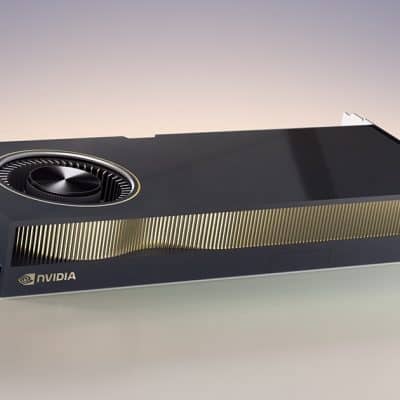

NVIDIA RTX 6000 Ada Generation

SKU: 900-5G133-2550-000More Information- NVIDIA Ada Lovelace Architecture

- DP 1.4 (4)

- PCI Express 4.0 x16
