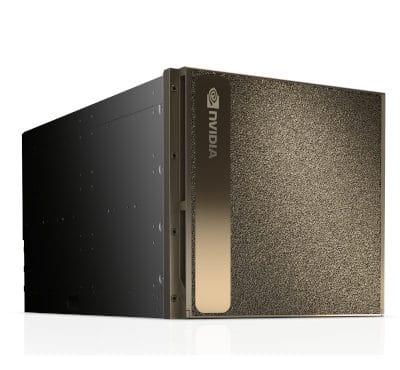

Designed for AI Reasoning Performance

The NVIDIA GB300 NVL72 features a fully liquid-cooled, rack-scale design that unifies 72 NVIDIA Blackwell Ultra GPUs and 36 Arm®-based NVIDIA Grace™ CPUs in a single platform optimized for test-time scaling inference. AI factories powered by the GB300 NVL72, using NVIDIA Quantum-X800 InfiniBand or Spectrum™-X Ethernet paired with ConnectX®-8 SuperNICs, deliver 50x higher output for reasoning model inference compared to the NVIDIA Hopper™ platform.

NVIDIA GB300 NVL72

- AI Reasoning Inference

- 288 GB of HBM3e

- NVIDIA Blackwell Architecture

- NVIDIA ConnectX-8 SuperNIC

- NVIDIA Grace CPU

- Fifth-Generation NVIDIA NVLink

Specifications

| Specification | GB300 NVL72 |
| --- | --- |
| Configuration | 72 NVIDIA Blackwell Ultra GPUs, 36 NVIDIA Grace CPUs |
| NVLink Bandwidth | 130 TB/s |
| Fast Memory | Up to 40 TB |
| GPU Memory \| Bandwidth | Up to 21 TB \| Up to 576 TB/s |
| CPU Memory \| Bandwidth | Up to 18 TB SOCAMM with LPDDR5X \| Up to 14.3 TB/s |
| CPU Core Count | 2,592 Arm Neoverse V2 cores |
| FP4 Tensor Core | 1,400 \| 1,100² PFLOPS |
| FP8/FP6 Tensor Core | 720 PFLOPS |
| INT8 Tensor Core | 23 PFLOPS |
| FP16/BF16 Tensor Core | 360 PFLOPS |
| TF32 Tensor Core | 180 PFLOPS |
| FP32 | 6 PFLOPS |
| FP64 / FP64 Tensor Core | 100 TFLOPS |
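The rack-level figures in the specifications can be cross-checked with simple arithmetic. The sketch below divides the listed totals by the GPU and CPU counts to get per-device numbers. It assumes that the "288 GB of HBM3e" feature refers to a single GPU and that 1 TB = 1,000 GB; neither is stated explicitly on this page, and the variable names are illustrative only.

```python
# Rack-level figures copied from the specifications above.
NUM_GPUS = 72
NUM_CPUS = 36
GPU_MEM_BW_TBPS = 576      # "Up to 576 TB/s"
NVLINK_BW_TBPS = 130       # "130 TB/s"
CPU_CORES_TOTAL = 2592     # "2,592 Arm Neoverse V2 cores"

# Assumption: the 288 GB HBM3e figure is per GPU (not stated on the page).
HBM_PER_GPU_GB = 288

# Assumption: decimal units, 1 TB = 1,000 GB.
GB_PER_TB = 1000

derived = {
    # Total HBM across the rack; should land near the listed "Up to 21 TB".
    "gpu_memory_tb": NUM_GPUS * HBM_PER_GPU_GB / GB_PER_TB,
    # Per-GPU share of the aggregate memory bandwidth.
    "mem_bw_per_gpu_tbps": GPU_MEM_BW_TBPS / NUM_GPUS,
    # Per-GPU share of the aggregate NVLink bandwidth.
    "nvlink_per_gpu_tbps": NVLINK_BW_TBPS / NUM_GPUS,
    # Cores per Grace CPU implied by the total core count.
    "cores_per_cpu": CPU_CORES_TOTAL // NUM_CPUS,
}

if __name__ == "__main__":
    for name, value in derived.items():
        print(f"{name}: {value:.2f}")
```

Under these assumptions the total HBM comes to about 20.7 TB, which is consistent with the listed "Up to 21 TB", and the core count works out to 72 cores per Grace CPU.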

You May Also Like

Related products

- NVIDIA Tesla P40 (SKU: N/A)
  - GPU Memory: 24 GB
  - CUDA Cores: 3840
  - Single-Precision Performance: 12 TeraFLOPS
  - System Interface: x16 PCIe Gen3

- NVIDIA DGX-2 (SKU: N/A)
  - World's first 2 petaFLOPS system
  - Enterprise-grade AI infrastructure
  - 12 total NVSwitches + 8 EDR InfiniBand/100 GbE Ethernet
  - 2 Intel Platinum CPUs + 1.5 TB system memory + dual 10/25 GbE Ethernet
  - 30 TB NVMe SSDs internal storage

- NVIDIA DGX Station (SKU: DGXS-2511C+P2CMI00)
  - Four NVIDIA Tesla V100 GPUs
  - Next-generation NVIDIA NVLink
  - Water cooling
  - 1/20 power consumption
  - Pre-installed standard Ubuntu 14.04 with Caffe, Torch, Theano, BIDMach, cuDNN v2, and CUDA 8.0
